It’s 2025, and if you’re still treating Tesla’s Full Self-Driving as some futuristic pipe dream, you haven’t been paying attention, or you’re deliberately ignoring the steady march of software taking the wheel. With Tesla’s Robotaxi service now live in Austin, Houston, and parts of Arizona, the autonomy conversation has shifted from speculation to logistics.

Cars without drivers are picking up fares and navigating pre-surveyed streets under the watchful eye of Version 13.3. It’s not sci-fi anymore; it’s deployment. But as any veteran road-tripper will tell you, even the best map can’t predict every detour. Ten days ago, one longtime Tesla owner shared a harrowing moment that reminded us this technology, no matter how impressive, still demands human vigilance.

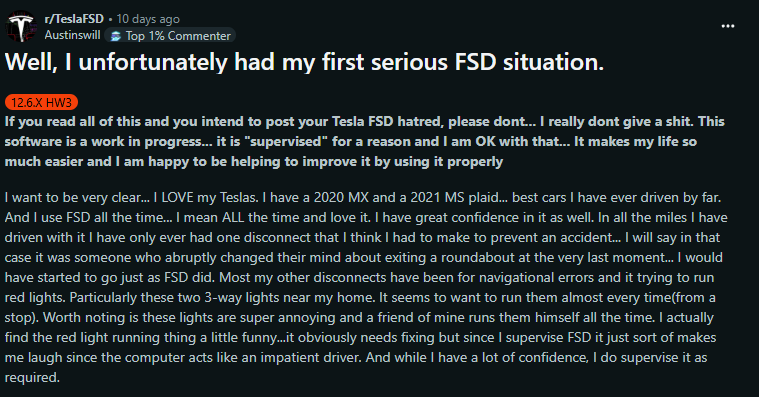

“If you read all of this and you intend to post your Tesla FSD hatred, please don't... I really dont care. This software is a work in progress... it is "supervised" for a reason and I am OK with that... It makes my life so much easier, and I am happy to be helping to improve it by using it properly

I want to be very clear... I LOVE my Teslas. I have a 2020 MX and a 2021 MS plaid... best cars I have ever driven by far. And I use FSD all the time... I mean ALL the time and love it. I have great confidence in it as well. In all the miles I have driven with it, I have only ever had one disconnect that I think I had to make to prevent an accident... I will say in that case it was someone who abruptly changed their mind about exiting a roundabout at the very last moment... I would have started to go just as FSD did. Most of my other disconnects have been for navigational errors and trying to run red lights.

Particularly, these two 3-way lights are near my home. It seems to want to run them almost every time(from a stop). Worth noting is that these lights are super annoying, and a friend of mine runs them himself all the time. I actually find the red light running thing a little funny...it obviously needs fixing, but since I supervise FSD, it just sort of makes me laugh since the computer acts like an impatient driver. And while I have a lot of confidence, I do supervise it as required.

Well, the other day I came across some train tracks. The bars came down as all the red lights started flashing. The MSP came to a stop just as a human would. I will say I was impressed, I had never been in that exact situation, which is amazing because there is another set of tracks I almost ALWAYS get stuck waiting for a public transit train that runs often. This, however, was a track for a normal industrial train. Well, I was sitting there, stopped and waiting for the train, and the FSD decided to go forward. And it didn't feel like the typical creeping forward it will sometimes do at a red light, it seemed like it was going to blast right through that stop bar. I jumped on the brakes pretty quickly, and as I came to a stop, I looked left, and that train was in my face.

Now, I don't know if FSD would have ultimately gone through that stop bar or not; it may have stopped, but I simply couldn't afford to wait and see like I often do. But what I do know is if it had gone through, there would have been a collision with not only the stop bars but the train as well. I did a voice report, of course. I have video and will post it if people really want to see, but it is as described.

What I find perplexing is that the issues I am seeing all have to do with its behavior when stopped at red lights... It is and always has been amazing while at speed... Heck, after this happened a few days later (yesterday actually,) FSD avoided a collision with a truck while I was going down the road, my MS and the truck started to merge together, and the FSD handled the evasion perfectly and smoothly.

I have a few questions.

Is there a way to alert Tesla to especially serious disconnects like this?

Does Tesla also use "negative training" examples for the AI? I think this means showing AI examples of what NOT to do. And an event like this should definitely qualify as something NOT to do.

It has been a while since an FSD upgrade...any guesses on the next update?

EDIT: OK, here is the Clip

EDIT 2: After watching the clip several times... I think I see what happened... The FSD came to a stop as the bars came down... They oscillated for a moment, then became still... beyond them, you can see the green traffic light. I think once those bars stopped moving the FSD was seeing the green light and thought it could go.”

This is not a smear piece. Quite the opposite. What makes this Reddit post from user Austinswill so compelling isn’t just the near-miss with a freight train, but the unwavering support he maintains for the platform. A software fluke nearly put him in a life-ending scenario, and his response wasn’t rage; it was reflection. He’s a true believer, one of Tesla’s millions of real-world beta testers. And his report carries weight precisely because it isn’t alarmist. He hit the brakes in time. The system kept learning. But it also underscored a critical truth: even the most advanced semi-autonomous system is only as safe as the human mind shadowing it.

Inside Tesla’s 2025 Robotaxi Pilot: $4.20 Rides, Safety Monitors & Camera-Only Sensors

- Tesla launched a pilot robotaxi service on June 22, 2025, using a fleet of ~10–20 Model Y vehicles operating within a geofenced area in South Austin, Texas

- Rides cost a promotional flat fee of $4.20 and currently include a “safety monitor” passenger who can intervene but doesn’t drive

- The system relies solely on eight cameras powered by FSD AI, no radar or lidar. This approach simplifies hardware but draws criticism compared to Waymo’s multi‑sensor setup

- Videos show erratic behavior, including phantom braking and wrong-lane entries, as well as at least one parking-lot accident, after which a Tesla supervisor took control

That point didn’t go unnoticed in the comments. As user Quercus_ put it, “It tried to kill me, but don’t dare criticize it.” Behind the sarcasm is a measured take: FSD is undeniably a marvel of modern engineering, but it’s also still prone to errors in high-context, low-speed situations.

We’re talking railroad crossings, complex intersections, and temporary construction zones, where signals conflict and a single misread could mean disaster. As another commenter, InfamousBird3886, noted, “Driving at speed in environments with few agents is comparatively easy for AVs… dense scenes, intersections, crosswalks... are much more challenging.”

That challenge isn't theoretical. Another user, Royal_Information929, described a strikingly similar event in Indiana, also involving a rail crossing and a momentary lapse in FSD’s judgment. And these aren’t isolated cases.

When FSD Misreads Signals: How Tesla Struggles at Intersections & Train Barriers

In the messy, often ambiguous ballet of urban infrastructure, the system still occasionally dances out of rhythm. Chris_Apex_NC chimed in with an honest take: “13.2 isn’t up to being unsupervised,” noting that while low-level human concentration might be enough most of the time, that sliver of inattention during the wrong few seconds can be a deal-breaker.

Here’s the kicker: a few days after his train incident, Austinswill’s same Model S Plaid, running the same software, flawlessly avoided a collision with a merging truck. The evasive maneuver was smooth, timely, and better than most drivers might manage themselves. That’s the paradox. FSD’s ceiling is higher than the average motorist's, but its floor, though rarely reached, can still drop below zero. It's a swing that highlights both the breathtaking potential of the technology and the unforgiving margin for error.

Tesla’s development model leans heavily on supervised real-world miles. Every drive is a data point. But what remains elusive is transparency. Tesla asserts that FSD is safer than human drivers, but there’s no public, third-party-reviewed dataset to back that up. As Quercus_ rightly noted,

“Safer on average would have been small comfort... if you hadn’t slammed on the brakes.”

How Tesla FSD Works: Camera-Only Neural Networks, Real-World Data & OTA Updates

- FSD uses neural networks that process data from eight external cameras to interpret roads, obstacles, traffic signals, and more, eschewing radar or lidar

- Teslas running FSD collect real-world driving data, which is sent back to Tesla to refine the AI models; improvements are deployed via over-the-air software updates.

- While marketed as “Full Self-Driving,” current deployments (like in robotaxis) involve human monitors, which puts them at roughly L2–L3 autonomy, not yet fully driverless.

- In robotaxi mode, vehicles are centrally monitored via fleet operations, enabling remote teleoperators to assist in tricky situations

What Austinswill and others are asking isn’t for perfection, but for visibility. Is there a process to flag especially dangerous disengagements? Are such events incorporated into training as “don’t do this” lessons? As much as Tesla has disrupted how we think about mobility, it's also challenged how we evaluate risk. Most of these moments never make headlines because they’re intercepted by humans doing exactly what the system demands: paying attention, supervising, ready to override.

That partnership is the key. Tesla FSD, for all its brilliance, is not yet driving alone. It needs us, just as we need it to continue evolving. And maybe that’s the most honest thing we can say about autonomy right now. It’s a relay race, not a sprint. The car takes the baton and runs beautifully for miles, but every so often, at a flashing red light or a lowered train barrier, it passes it back. The future may well be driverless. But for now, the best thing on the road is still a human who knows when to take the wheel.

Image Sources: Tesla Media Center

Noah Washington is an automotive journalist based in Atlanta, Georgia. He enjoys covering the latest news in the automotive industry and conducting reviews on the latest cars. He has been in the automotive industry since he was 15 years old and has been featured in prominent automotive news sites. You can reach him on X and LinkedIn for tips and to follow his automotive coverage.
