His Tesla Model 3's FSD system took the wheel, but it almost took him into another country. Read the complete, frantic account of how one wrong exit nearly required a passport.

As the Senior Reporter for Torque News, I have spent decades analyzing the automotive industry's most significant technological shifts. The Tesla Model 3 is the most critical vehicle in this current revolution, representing the electric dream translated into mass-market reality.

However, the Model 3's pioneering role also places it on the bleeding edge of software development, where technological ambition frequently collides with the messy reality of public roads. A recent, alarming story from a Model 3 owner has highlighted the high-stakes risk involved when drivers delegate trust to an imperfect system, specifically detailing a navigational error that could have led to a serious international incident.

The discussion, which began circulating on the Tesla Model 3 and Model Y Owners Club Facebook page, centered on the critical, yet still supervised, system known as Full Self-Driving (FSD). It's an indispensable technology for many owners, making long commutes manageable, but this latest anecdote serves as a potent reminder of the driver's ultimate, non-negotiable responsibility.

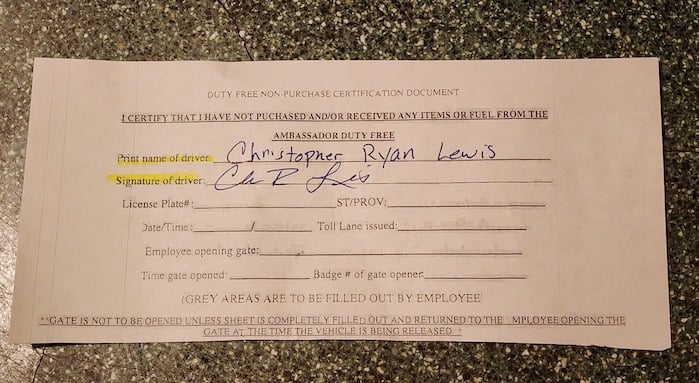

Christopher Ryan Lewis's post paints a vivid picture of how quickly a moment of inattention can turn into a serious dilemma when software takes the reins. The Model 3 owner exclaims,

"I am a Tesla Model 3 owner. Today I wasn't paying attention, and FSD took the wrong exit. Next thing I know, I'm on my way to cross the bridge into Canada with no place to turn around!!! Good thing I stopped and asked for help at the duty-free!"

Don't Fall Asleep With FSD

This anecdote, simple as it is, speaks volumes about the inherent dangers nestled within the current state of advanced driver-assistance systems. While FSD promises a tranquil, hands-off experience, Lewis's incident demonstrates that the system is, at best, an advanced co-pilot that requires constant, vigilant supervision. The move by the FSD system to take the wrong exit is a classic navigational glitch, one that might be annoying in a suburban setting but becomes critically dangerous near complex infrastructure like international border crossings. When a car is put onto a one-way road leading directly into customs, the potential for a serious and embarrassing situation skyrockets, especially given the strict legal implications of border crossing protocols.

What's most troubling about this story is how quickly the FSD system placed the driver in a predicament with no easy way out, one that required manual intervention and even outside assistance to resolve. My ongoing coverage of the Tesla community indicates that this type of "map error" or "late decision" near complex interchanges is far from an isolated event.

Many users in the same Facebook thread were echoing similar concerns. They detail instances where FSD executes a lane change too late, misinterprets merging lanes, or fails to properly process confusing road markings, issues that directly contribute to missed or incorrect exits. This consensus points not to a single faulty unit but to a systemic problem in how FSD processes complex, real-world navigational data, which the system still relies on heavily.

Tesla's Master Plan Is Still A Work In Progress

The creation of the Model 3 was driven by four core strategic objectives laid out in Tesla's master plan:

- Mass-Market Acceleration: To accelerate the world's transition to sustainable energy by delivering a truly high-volume, affordable electric vehicle.

- Affordability and Accessibility: To produce a vehicle priced competitively for the average consumer, following the higher-priced Model S and Model X.

- Technological Integration: To serve as the foundational platform for advanced software, integrating the latest advancements in electric drivetrain and safety technology.

- Safety and Performance: To achieve sports-car-level performance and maintain a top-tier safety rating, demonstrating that electric vehicles could be both environmentally conscious and thrilling to drive.

A Dangerous Sense of Complacency

Delving further into Christopher Lewis's near-miss, the sheer panic of realizing you are trapped on a bridge approach with no feasible turnaround point cannot be overstated. The FSD system, in its current supervised state, often instills a dangerous sense of complacency in the driver.

When the system functions flawlessly for hours, the driver's nervous system becomes accustomed to passive monitoring, making the sudden need for a high-stakes, instant intervention far more difficult and stressful than if the driver had been controlling the vehicle all along. It's the paradox of automation: the more reliable it seems, the less prepared the human operator is for its inevitable failures.

This tension is reinforced by other user feedback. For example, another member of the Facebook thread, "MrJakk," noted his issues with FSD's anticipatory capability, stating: "[It'll] also wait until around 0.3 miles before the exit to get in the correct lane. I typically do it a mile in advance in normal traffic. Its 'forward thinking' isn't the best right now. For me, it's more of an issue than just rerouting because where I live, there are very few highways or exits to turn around, so a missed exit can add 30 minutes to an ETA at times."

This inability to plan ahead, and the resulting reliance on last-minute, aggressive maneuvers, is likely what put Lewis on an irreversible course toward an international border.

There's More To the Story

The core issue facing Model 3 owners who have purchased or subscribed to FSD is not merely a software bug, but a fundamental misalignment between the system's branding and its operational capability. The "Full Self-Driving" moniker carries the implication of autonomy, yet the real-world experience, as evidenced by Lewis's story, is one of a complex driver-assistance package prone to errors in intricate environments.

The Model 3 was designed to democratize electric transport.

Still, the FSD component currently requires owners to serve as unpaid, high-fidelity beta testers, constantly feeding data back to Tesla through their disengagements. This is a burden that consumers purchasing a vehicle at this price point should not have to bear, especially when the consequences of failure involve customs officials and potential security risks.

From a safety and regulatory standpoint, incidents like this Model 3 near-miss reinforce the urgent need for stricter oversight from bodies like the National Highway Traffic Safety Administration (NHTSA). A simple map issue that nearly sends a driver across an international border is a serious malfunction that goes beyond mere inconvenience.

It highlights how FSD struggles with geopolitical boundaries and complex highway infrastructure. The technology needs to be far more robust at identifying and respecting "no-go" zones, such as toll entries or border routes, where a software error can escalate into a national security issue or a major traffic headache. For the Model 3, the promise of the future remains hampered by its current inability to reliably navigate the present's poorly designed or highly sensitive road networks.

The Story Isn't Over

Ultimately, the stories from Christopher Lewis and other Tesla owners are about managing expectations in a rapidly evolving technological landscape. Model 3 owners purchased a vehicle that embodies innovation and the promise of a self-driving future. The high cost of FSD, whether bought outright or via subscription, implies a superior level of performance that, in reality, remains elusive.

When FSD is functioning, it's revolutionary; when it fails, it's startlingly primitive. For Tesla to truly live up to the vision established by the Model 3, it must close the gap between the revolutionary brand promise and the reality of the software's performance, transforming FSD from a heavily supervised beta product into the seamless, safe experience owners were promised.

Do You Have an FSD Story?

Have you ever had a close call where Autopilot or FSD led you into an unexpected or tricky situation? Share your story by clicking the red Add New Comment link below!

I'm Denis Flierl, a Senior Torque News Reporter since 2012, bringing over 30 years of automotive expertise to every story. My career began with a consulting role for every major car brand, followed by years as a freelance journalist test-driving new vehicles, which equipped me with a wealth of insider knowledge. I specialize in delivering the latest auto news, sharing compelling owner stories, and providing expert, up-to-date analysis to keep you fully informed.

Follow me on X @DenisFlierl, @WorldsCoolestRides, Facebook, Instagram and LinkedIn

Photo credit: Denis Flierl