Few scenarios rival the spectacle of a machine systematically rejecting its primary user based on criteria that remain as mysterious as they are maddening.

Grant Hanevold's wife has become an unwitting participant in what amounts to automotive discrimination, where her Hyundai Palisade's driver attention monitoring system has apparently decided that her very existence constitutes a safety hazard requiring constant intervention.

This isn't merely a technical glitch; it's a perfect metaphor for our increasingly complicated relationship with technology that presumes to understand human behavior better than humans understand themselves.

The setup reads like science fiction: a vehicle that performs flawlessly for one driver while treating another as an incompetent menace requiring constant supervision.

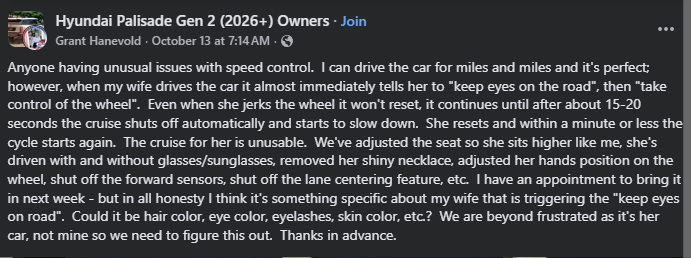

“Anyone having unusual issues with speed control. I can drive the car for miles and miles, and it's perfect; however, when my wife drives the car, it almost immediately tells her to "keep eyes on the road", then "take control of the wheel". Even when she jerks the wheel, it won't reset; it continues until, after about 15-20 seconds, the cruise shuts off automatically and starts to slow down. She resets, and within a minute or less, the cycle starts again. The cruise for her is unusable. We've adjusted the seat so she sits higher like me, she's driven with and without glasses/sunglasses, removed her shiny necklace, adjusted her hand position on the wheel, shut off the forward sensors, shut off the lane centering feature, etc. I have an appointment to bring it in next week - but in all honesty, I think it's something specific about my wife that is triggering the "keep eyes on the road". Could it be hair color, eye color, eyelashes, skin color, etc.? We are beyond frustrated as it's her car, not mine, so we need to figure this out. Thanks in advance.”

The systematic nature of the vehicle's rejection creates a feedback loop of technological harassment that transforms driving from transportation into psychological warfare. “Even when she jerks the wheel, it won't reset; it continues until, after about 15-20 seconds, the cruise shuts off automatically and starts to slow down. She resets, and within a minute or less, the cycle starts again.” This description captures the Kafkaesque quality of arguing with a machine that has already reached its verdict and refuses to consider appeals or evidence to the contrary.

The relentless repetition of this cycle transforms what should be a convenience feature into a source of genuine distress, where the vehicle's safety systems become the primary safety hazard. The cruise control, designed to reduce driver fatigue and improve highway safety, becomes unusable precisely when it would provide the most benefit.

This represents a fundamental failure of human-centered design, where technological sophistication creates problems that didn't exist before the technology was introduced.

The exhaustive troubleshooting efforts reveal the desperate lengths to which owners will go when confronted with inexplicable technological behavior.

“We've adjusted the seat so she sits higher like me, she's driven with and without glasses/sunglasses, removed her shiny necklace, adjusted her hand position on the wheel, shut off the forward sensors, shut off the lane centering feature, etc.” This litany of attempted solutions reads like an inventory of human characteristics that technology might find objectionable, transforming personal attributes into potential system incompatibilities.

The suggestion that physical characteristics like hair color, eye color, or skin tone might influence system behavior introduces disturbing questions about the training data and algorithms that govern these monitoring systems. “I think it's something specific about my wife that is triggering the "keep eyes on the road". Could it be hair color, eye color, eyelashes, skin color, etc.?” This speculation, while born of frustration, highlights legitimate concerns about bias in technology systems that may not have been adequately tested across diverse populations.

Jeremy Barr's response introduces the possibility that medical interventions might create compatibility issues with automotive monitoring systems, revealing another dimension of technological discrimination. “Polarized sunglasses. OR! Eye surgery, specifically anything including lens replacement.” The exclamation point suggests the excitement of recognition, where someone else's misfortune provides validation for a theory about technological bias against specific medical conditions or corrective devices.

This observation raises profound questions about accessibility and inclusion in automotive design, where sight-restoring medical procedures or common vision correction methods might render individuals incompatible with safety systems designed to protect them. The irony is palpable: technologies intended to enhance safety may systematically exclude people who have taken steps to improve their own health and vision.

Donna Jones Warren's contribution reveals the community knowledge that develops around these technological quirks, where owners share hard-won insights about the specific triggers that cause system failures.

“Does she wear sunglasses? My brother-in-law had the same problem, and it was his sunglasses. My husband kept putting one hand over the top of the wheel, blocking its view.” The casual mention of hand position blocking the system's view illustrates how natural human behaviors can confuse technology systems trained on idealized driving positions.

The reference to sunglasses as a potential culprit highlights the fundamental challenge of designing monitoring systems that must account for the infinite variety of human appearance and behavior. What seems like a simple task, determining whether a driver is paying attention, becomes enormously complex when confronted with the reality of diverse populations using different accessories, having various physical characteristics, and exhibiting individual driving styles.

The Anthropology of Automotive Technology

- Technology systems trained on limited datasets may systematically discriminate against individuals whose characteristics weren't adequately represented in training data.

- Monitoring systems designed around idealized driving positions and behaviors may fail when confronted with natural human variation and adaptation.

- Common medical procedures and corrective devices may create incompatibilities with safety systems, effectively excluding portions of the population from using advanced features.

- Differences in average height, seating position, and physical characteristics between demographic groups may create systematic biases in recognition systems.

Santiago Vera's technical guidance provides the kind of detailed navigation through menu systems that has become essential knowledge for modern vehicle ownership. “Set up the vehicle, then driver assistance, and click on where in the diagram it says Driver Attention Warning. There you can see: Leading Vehicle Alert off, Forward attention warning on off, Inattentive driving warning on off.” This step-by-step, instruction-manual approach to solving what should be intuitive technology illustrates how automotive advancement has created new categories of required expertise.

The complexity of these menu systems reflects the broader challenge of making sophisticated technology accessible to users who simply want to drive without becoming systems administrators for their vehicles. The fact that multiple layers of settings must be navigated to address a basic compatibility issue suggests that automotive user interface design has prioritized feature completeness over user experience.

Kelly O'Shaughnessy's reference to page 444 of the manual introduces the sobering reality that understanding modern vehicles requires academic-level study of documentation that rivals software manuals in complexity. “Page 444 of the manual. With the vehicle on, select Setup > Vehicle > Driver Assistance > Driver Attention Warning > Forward Attention Warning in the infotainment system to set whether to use the function.” The specificity of this citation suggests that owners have become researchers, cataloging solutions to problems that shouldn't exist in well-designed systems.

The manual excerpt reveals the paternalistic nature of these systems, where technology assumes authority over human behavior with the confidence of a system that believes it understands attention better than conscious beings. “If Forward Attention Warning is enabled, the function warns the driver when the driver's gaze is not focused on the road.” The clinical language masks the presumption embedded in this technology: that machines can accurately assess human mental states and intervene appropriately.

The note that "When the vehicle is restarted, Forward Attention Warning always turns on" demonstrates how these systems prioritize their own operation over user preferences, resetting to default behaviors that may have already proven problematic. This represents a fundamental philosophical disagreement about who controls the vehicle, where manufacturers assume that their programming knows better than the individual user experience.

The Philosophy of Human-Machine Authority

- Safety systems that override user preferences based on algorithmic assessments of appropriate behavior, regardless of individual circumstances or needs.

- Systems that reset to manufacturer preferences rather than learning from user adaptations and customizations.

- Technology systems that claim to understand human mental states better than humans understand themselves, leading to conflicts between machine judgment and human experience.

- Universal design approaches that fail to account for human diversity, systematically excluding individuals who don't match algorithmic expectations.

The broader implications of this driver attention monitoring failure extend beyond individual frustration to reveal fundamental challenges in the development of technology for automotive applications. The assumption that attention can be reliably assessed through visual monitoring ignores the complexity of human cognition and the variety of ways that different individuals maintain situational awareness.

The gender dynamics embedded in this particular case, where the male owner experiences no problems while the female primary user cannot operate the system, highlight potential biases in automotive technology development that may reflect the demographics of engineering teams and test populations. If safety systems systematically fail for specific demographic groups, they become safety hazards rather than safety enhancements.

The community response to this problem demonstrates the collaborative troubleshooting approach that has become essential for navigating complex automotive technology. Owners share experiences and solutions that supplement official documentation, creating informal support networks that help individuals adapt to systems that weren't designed with their specific characteristics in mind.

The evolution of automotive safety technology must account for human diversity rather than assuming universal compatibility with idealized user profiles. The failure of driver attention monitoring for specific individuals reveals gaps in development processes that may exclude significant portions of the driving population from accessing advanced safety features.

The psychological impact of being repeatedly rejected by your own vehicle cannot be overstated: technology designed to enhance confidence and safety instead creates anxiety and self-doubt. The wife in this scenario faces a choice between accepting technological harassment and abandoning features she has paid for, neither of which is an acceptable outcome for modern automotive design.

The financial implications extend beyond the immediate frustration to questions about value and accessibility, where premium features become unusable for specific users due to algorithmic bias or inadequate testing.

This creates a form of technological redlining, where certain individuals cannot access the full functionality of products they have purchased due to characteristics beyond their control.

The ongoing development of driver monitoring technology must prioritize inclusive design that accounts for human diversity rather than assuming compatibility with narrow demographic profiles. The success of these systems depends not on their sophistication but on their ability to work reliably for all users regardless of individual characteristics.

The resolution of this particular case will likely require manufacturer intervention and software updates that address the underlying bias in attention assessment algorithms. However, the broader challenge of creating technology that serves human diversity rather than enforcing algorithmic conformity will require fundamental changes in development approaches and testing methodologies.

The irony that safety systems can become safety hazards when they systematically fail for specific users illustrates the complex challenges of implementing technology in safety-critical applications. The goal of reducing human error through technological intervention becomes counterproductive when the technology itself introduces new categories of error and exclusion.

The experience of the Palisade owner in this story is a frustrating reminder that even the most advanced vehicles can suffer from inexplicable issues. The shaking issue in the 2026 Palisade Calligraphy is another example of how complex modern vehicles can be, and how difficult it can be for dealerships to diagnose and fix problems that are not easily reproducible.

Do you believe that driver attention monitoring represents genuine safety advancement or technological overreach, and what role should user customization play in adapting these systems to individual needs and characteristics?

Share your thoughts on the balance between automated safety systems and human autonomy in the comments below.

Noah Washington is an automotive journalist based in Atlanta, Georgia. He enjoys covering the latest news in the automotive industry and conducting reviews on the latest cars. He has been in the automotive industry since he was 15 years old and has been featured in prominent automotive news sites. You can reach him on X and LinkedIn for tips and to follow his automotive coverage.