There is a particular unease that comes from technology working almost perfectly, just long enough for you to trust it. A recent post in the Tesla Cybertruck group captures that discomfort in vivid detail, describing a pattern that feels less like a bug and more like a personality shift. One owner reports that Full Self-Driving performs flawlessly for 100 miles or more, only to become erratic and frankly frightening after a stop, even when conditions and routes are identical.

The account is careful and specific. Same roads. Same route. No camera obstructions. No weather changes. Yet after stopping for a period and resuming the trip, the Cybertruck begins to wander. Crossing yellow and white lines. Drifting too close to other vehicles. Steering inputs that look nervous rather than deliberate. What makes it more unsettling is that none of the usual fixes work. Forced resets. Camera recalibration. Power cycling. Nothing restores normal behavior in the moment. Only a full overnight rest in the garage brings the system back to its composed, confident self the next morning.

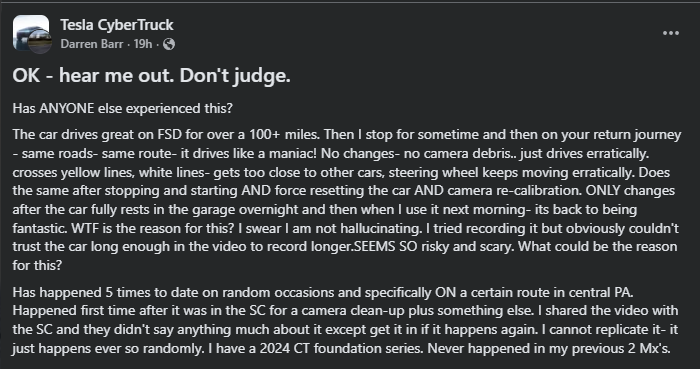

“OK - hear me out. Don't judge.

Has anyone else experienced this?

The car drives great on FSD for over a 100+ miles. Then I stop for some time, and then on your return journey, same roads, same route, it drives like a maniac! No changes, no camera debris.. just drives erratically. crosses yellow lines, white lines, gets too close to other cars, steering wheel keeps moving erratically. Does the same after stopping and starting, and force resetting the car and camera recalibration. ONLY changes after the car fully rests in the garage overnight, and then when I use it the next morning, it's back to being fantastic. WTF is the reason for this? I swear I am not hallucinating. I tried recording it, but obviously couldn't trust the car long enough in the video to record longer. seems so risky and scary. What could be the reason for this?

Has happened 5 times to date on random occasions and specifically ON a certain route in central PA. Happened first time after it was in the SC for a camera clean-up plus something else. I shared the video with the SC, and they didn't say anything much about it except get it in if it happens again. I cannot replicate it- it just happens ever so randomly. I have a 2024 CT foundation series. Never happened in my previous 2 Mx's.”

That detail matters. This does not read like random driver paranoia or one-off misbehavior. The owner reports the issue occurring five separate times, often on the same route in central Pennsylvania, and only after the vehicle has been driven successfully for long stretches. The contrast is stark enough that the driver no longer feels safe leaving FSD engaged long enough to even record extended video evidence. Trust evaporates quickly when the steering wheel starts acting like it has opinions of its own.

Tesla Cybertruck: Radical Design

- Stainless steel exterior panels remove the need for paint, reducing cosmetic wear concerns while introducing challenges related to glare, fingerprints, and body repair techniques.

- Steer-by-wire and rear-wheel steering enable tight maneuvering for a large vehicle, though the steering response differs markedly from conventional pickup tuning.

- Cabin design minimizes physical controls in favor of touchscreen-based operation, simplifying the dashboard but increasing reliance on software for basic functions.

- The powered tonneau cover improves cargo security and aerodynamics, but adds weight and limits flexibility compared with simpler bed-cover designs.

Other owners quickly chimed in, and their responses suggest the original poster is not alone in noticing inconsistent behavior. One notes that a few software updates ago, FSD felt dialed in, but recent behavior includes speed mismanagement, odd lane choices, and inefficient routing. Another mentions unrelated but concerning issues stacking up, from charging failures to an air compressor that seems to run more often than it should. Individually, these may sound minor. Together, they paint a picture of systems that do not always age gracefully, whether over the course of a single drive or across successive software updates.

Several commenters zeroed in on navigation and GPS as a possible culprit for the Cybertruck's issues. If positional data begins to drift or conflict with camera inputs after extended runtime, the car may start making decisions based on slightly corrupted context. That could explain why the same physical road suddenly looks unfamiliar to the system after a stop, even though it behaved perfectly hours earlier. Unlike cameras, GPS faults are invisible. You cannot wipe them clean with a cloth.

What makes this especially interesting is that the problem appears route-specific. That hints at map data quirks, local signal interference, or edge-case geometry that only trips the system once enough state has accumulated. Modern driver assistance is not just reacting to what it sees. It is constantly layering perception, prediction, and navigation into a single model. When one of those layers degrades, the behavior can look chaotic even if no single sensor has outright failed.

The service center response, or lack of one, adds to the frustration. Being told to “bring it in if it happens again” is not particularly helpful when the issue cannot be reliably replicated on demand and seems to resolve itself only after the truck sits overnight, not after a simple restart or power cycle. From the owner’s perspective, that feels like being asked to gamble with safety in order to collect better data.

There is an uncomfortable truth embedded here. Full Self-Driving, especially in a vehicle as new and unconventional as the Cybertruck, is still highly state-dependent. It can behave brilliantly when everything aligns, and then degrade in ways that are difficult for drivers to predict or diagnose. That unpredictability is far more unsettling than consistent mediocrity. At least with the latter, you know when to intervene.

What separates this post from routine FSD complaints is the clarity of the pattern. Excellent behavior. A stop. A restart. Then chaos. Fixed only by time and rest. That suggests something cumulative, whether thermal, computational, or data-related, that the system does not fully reset until the vehicle truly powers down.

For now, there is no clean answer. Camera recalibration may help some. Service centers may uncover GPS or hardware anomalies for others. But the underlying issue remains troubling. When a system earns your trust for hours and then abruptly violates it without warning, the emotional whiplash is severe. As one owner put it, it feels risky and scary, not because FSD is always bad, but because it is sometimes brilliant and sometimes not, with no obvious signal telling you which version you are about to get.

That may be the most important takeaway. Until these transitions become more transparent or predictable, drivers will continue to hover over the controls, wondering not whether the system can drive, but whether it will decide to forget how.

Image Sources: Tesla Media Center

Noah Washington is an automotive journalist based in Atlanta, Georgia. He enjoys covering the latest news in the automotive industry and conducting reviews on the latest cars. He has been in the automotive industry since he was 15 years old and has been featured in prominent automotive news sites. You can reach him on X and LinkedIn for tips and to follow his automotive coverage.


Comments


By this late date, anyone who relies on FSD is likely a couple standard deviations to the left on the IQ curve. But if they're having fun, then more power to them.