For years, Elon Musk has promised that a Tesla "Robotaxi" would eventually be a "money-printing machine" for its owners, a vehicle so safe and capable that steering wheels would become vestigial organs of a bygone era. But this week, the data finally caught up with the hype, and the picture it paints isn't just unflattering; it’s alarming.

New data from the National Highway Traffic Safety Administration (NHTSA) on Tesla’s test fleet in Austin, Texas, shows a crash rate significantly higher than that of human drivers. The "Cybercab" was supposed to herald a new era of autonomy. Instead, it has reignited a firestorm of regulatory scrutiny and public skepticism.

The Data: A Stark Departure from Safety Claims

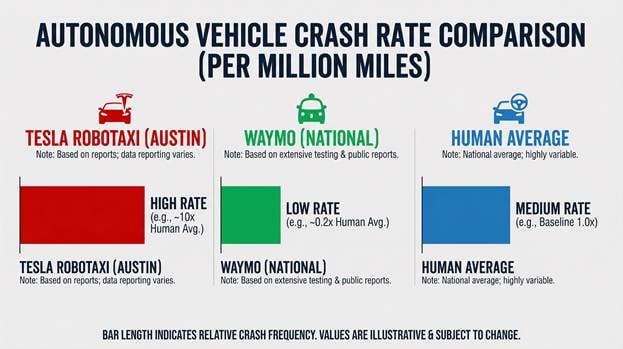

The numbers are difficult to ignore. According to the latest update to NHTSA's Standing General Order (SGO) incident report database, Tesla’s Robotaxi fleet in Austin recorded five new crashes in the last month alone, bringing the total to 14 incidents since the pilot launched eight months ago.

When you crunch the mileage reported in Tesla's Q4 2025 earnings, the math becomes grim: the fleet is currently experiencing roughly one crash every 57,000 miles. For comparison, the average human driver in the United States experiences a police-reported crash about once every 500,000 miles. Dividing 500,000 by 57,000 gives about 8.8, so by these benchmarks Tesla's Robotaxi fleet is crashing 8 to 9 times more often than humans.
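The comparison above is simple division, and it is easy to check. The sketch below reproduces it under stated assumptions: the 57,000-mile interval and the 500,000-mile human baseline are the figures quoted in this article, while the total fleet mileage is back-calculated (14 crashes × 57,000 miles) rather than taken directly from Tesla's report. The function names are hypothetical, chosen only for illustration.

```python
# Back-of-envelope check of the Robotaxi vs. human crash-rate comparison.
# Assumption: total fleet mileage is back-calculated from the article's
# "one crash every 57,000 miles" figure, not read from Tesla's filings.

ROBOTAXI_CRASHES = 14               # SGO-reported incidents since the pilot launched
MILES_PER_ROBOTAXI_CRASH = 57_000   # article's figure for the Austin fleet
MILES_PER_HUMAN_CRASH = 500_000     # police-reported crash baseline for U.S. drivers


def crashes_per_million_miles(miles_per_crash: float) -> float:
    """Convert a miles-between-crashes interval into crashes per million miles."""
    return 1_000_000 / miles_per_crash


def relative_crash_rate(miles_per_crash_a: float, miles_per_crash_b: float) -> float:
    """How many times more often fleet A crashes than fleet B, per mile driven."""
    return miles_per_crash_b / miles_per_crash_a


implied_fleet_miles = ROBOTAXI_CRASHES * MILES_PER_ROBOTAXI_CRASH
ratio = relative_crash_rate(MILES_PER_ROBOTAXI_CRASH, MILES_PER_HUMAN_CRASH)

print(f"Implied fleet mileage: {implied_fleet_miles:,} miles")
print(f"Robotaxi: {crashes_per_million_miles(MILES_PER_ROBOTAXI_CRASH):.1f} crashes per million miles")
print(f"Human:    {crashes_per_million_miles(MILES_PER_HUMAN_CRASH):.1f} crashes per million miles")
print(f"Ratio:    {ratio:.1f}x")
```

The ratio works out to about 8.8, which is where the "8 to 9 times" range comes from. Expressing both fleets as crashes per million miles (about 17.5 versus 2.0) is one way a standardized metric could present the same comparison.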

Even more concerning is that these crashes occurred while a "trained safety monitor" was in the vehicle. This means the system is failing even with a human safety net—raising the terrifying question of what would happen if that monitor were removed, a step Tesla began taking in limited "unsupervised" tests in late January 2026.

A Cloud Over Autonomy: The Trust Deficit

This performance is creating a thick cloud of doubt over the entire autonomous driving industry. When the most recognizable name in electric vehicles fails this publicly, it doesn't just hurt Tesla; it poisons the well for the technology as a whole.

To remove this cloud, three things are needed:

- Radical Transparency: Tesla continues to redact the narrative section of its crash reports, citing "confidential business information." To win back public trust, the company must stop hiding the "how" and "why" of these failures.

- Standardized Safety Metrics: We need a federal framework that defines what "safe" actually looks like. Currently, Tesla uses its own internal metrics to claim FSD is "safer than a human," while third-party data often suggests the opposite.

- Third-Party Validation: We can no longer rely on a company's own marketing department to grade its homework. Independent safety audits must become the industry standard.

The Boy Who Cried "Full Self-Driving"

Tesla has a long history of overpromising and underdelivering, a strategy that is now actively damaging the brand. From the 2016 claim that a Tesla would drive itself across the country by 2017, to the repeated "end of the year" promises for Level 5 autonomy, fatigue is setting in.

Brand loyalty is a finite resource. When customers pay upwards of $15,000 for a "Full Self-Driving" suite that requires constant supervision and, according to the Austin data, is statistically more dangerous than their own driving, they don't just feel disappointed; they feel misled. This has already led to regulatory probes into whether Tesla's marketing is "unambiguously false."

Impact on Valuation and Image

Tesla is no longer valued solely as a car company; it is valued as an AI and robotics company. If the "AI" part of that equation (the FSD software) is perceived as a failure, the valuation floor drops.

Markets have already begun to react, with TSLA shares sliding as news of the 14 Austin crashes circulated. If the Austin trend persists, Wall Street will likely stop viewing FSD as a "flywheel" for growth and start viewing it as a massive liability. A system crashing every 57,000 miles cannot support a trillion-dollar market cap indefinitely.

The Leaders in the Clubhouse

While Tesla struggles with its vision-only approach, other companies are proving that autonomy is possible when you don't cut corners on hardware.

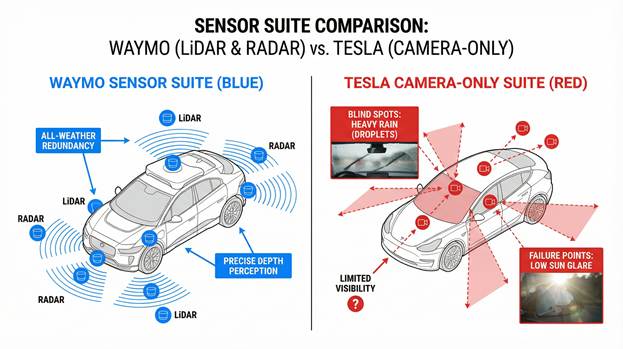

- Waymo (Alphabet): With over 125 million fully driverless miles, Waymo reports a crash rate significantly lower than the human average. Its use of LiDAR and radar provides the redundancy that Tesla's camera-only system lacks.

- Zoox (Amazon): By building a robotaxi from the ground up without a steering wheel and testing it in dense urban environments, Zoox is prioritizing a purpose-built safety architecture.

- Cruise (GM): Despite previous setbacks, Cruise has returned to testing with a heavy focus on "safety-first" metrics and transparency with regulators.

How Tesla Digs Out

To dig itself out of this hole, Tesla must execute a "Safety First" pivot. This means:

- Admitting the Limits of Vision-Only: It may be time to reintroduce radar to the Robotaxi fleet to handle the "edge cases" (like Texas thunderstorms) that currently baffle the software.

- Stopping the Beta Testing on Public Roads: The "move fast and break things" ethos is great for social media apps, but it’s deadly for two-ton machines.

- Rebranding FSD: Adopting "Supervised Autonomy" as the permanent brand name, rather than a temporary disclaimer, would align marketing with reality.

Wrapping Up

The data from Austin is a wake-up call for Tesla and the autonomous vehicle industry. A crash rate nearly nine times higher than that of human drivers is not a "growing pain"; it is a fundamental system failure. If Tesla wants to remain the leader in the EV space, it must stop treating safety as a marketing hurdle and start treating it as an engineering requirement. Until the "Cybercab" can prove it is safer than a teenager with a learner's permit, the dream of a Tesla-powered autonomous future will remain stuck in the garage.

Disclosure: Images rendered by Artlist.io

Rob Enderle is a technology analyst at Torque News who covers automotive technology and battery developments. You can learn more about Rob on Wikipedia and follow his articles on TechNewsWorld, TGDaily, and TechSpective.
