Self-driving cars may need to be electric, but electric cars don’t need to drive themselves.

Last week, we took a look at why an electric car fan and expert can love Tesla, yet not trust them. From reliability concerns to bet-the-company crap-shoots on automated manufacturing technology that proved a failure time and again in the auto industry, the company diminished its brand name among a lot of consumers by nearly failing every time it launched a new model and leaving its customers holding the risk of cars that might not work seamlessly if the company’s future bets didn’t pay off.

Those risks pale in comparison to the risks of promising customers that ordinary cars can drive themselves, as a series of accidents points out.

In the latest development, Israel (not insignificantly the birthplace of Tesla’s first-generation self-driving system) banned Tesla owners from using the feature inside its borders. The country’s Ministry of Transportation ordered the company to remind its drivers that it is illegal to turn on the self-driving Navigate on Autopilot system in their Teslas, because self-driving systems haven’t been approved for use on Israel’s roads.

Last year, Tesla made the basic safety functions of its Autopilot self-driving system (so-called Level 2 driver-assist features such as automatic emergency braking and basic lane-keeping assistance) standard and set them to turn on by default. Almost every other luxury car and a lot of modern non-luxury models have similar features.

Where Tesla differs is in two extra features. The first is Enhanced Summon, which allows owners to summon the car remotely in a parking lot and have it drive to them autonomously. Enhanced Summon only works in private parking lots, so it may not fall under the authority of government ministries. The other is Navigate on Autopilot, which allows the car to automatically follow a route entered into the GPS system, changing lanes and taking exit ramps as needed, with or without driver permission.

Tesla CEO Elon Musk claims that robot driving will be three to four times safer than human drivers. He has said he bases the numbers on U.S. federal crash statistics but has never publicized how he or Tesla arrived at the multiple, and the NHTSA, which gathered the statistics Musk says the numbers are based on, disputes their accuracy.

In a wide-ranging speech last April at an investor conference that Tesla dubbed “Autonomy Day,” Musk announced that he was staking the company’s future on self-driving cars by building a Tesla Network in which all owners of the company’s Model 3s would be able to rent out their cars to give rides to others autonomously. “You’d be stupid not to buy a Model 3,” he said. He went so far as to announce that the company would stop offering open-ended leases on Model 3s, instead opting to retake ownership of all off-lease Model 3s to add to the Network on Tesla’s own behalf.

At the same conference, Musk and Tesla engineers extolled the virtues of Tesla’s autonomous driving technology, which relies on software, cameras, and forward-looking radar, and dismissed, even ridiculed, other automakers’ efforts to add expensive-but-accurate Lidar sensors to their systems.

But there’s a fly in Musk’s ointment: Despite Musk’s assertions that the company’s software is superior because of its vast collection of real-world traffic data (collected in the background from the vast majority of Teslas on the road every time the cars hit the streets), the spate of often-fatal crashes from Teslas slamming into the back of stationary objects at high speeds has continued unabated.

Electronic Sensor Problem

The problem comes down to sensors. Unlike a human driver, electronic sensors, especially cameras and radar, can only “see” a few hundred feet down the road and can only process information so fast. (Musk announced that Tesla would introduce a new dedicated Autopilot computer chip to accelerate the processing. The company started rolling out the chip on new cars last year and offers it as an upgrade on older models, but most Teslas still don’t have it.)

NTSB and NHTSA Investigations

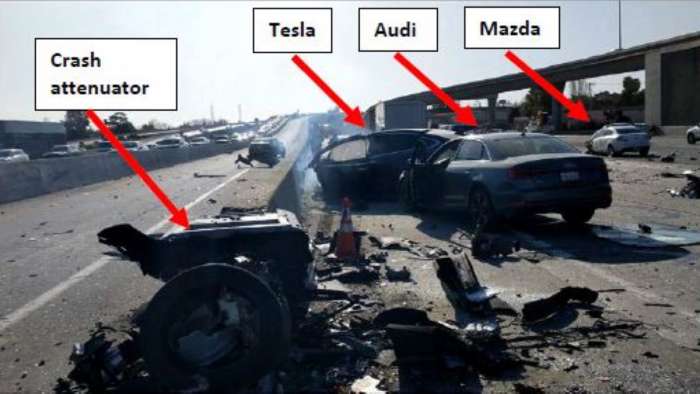

The National Transportation Safety Board is meeting next month to rule on the probable cause of a fatal 2018 crash in Mountain View, California, which killed 38-year-old Walter Huang, who was relying on Autopilot when his Model X crashed into a concrete highway barrier. In its investigation of a 2018 crash in which a Tesla driving on Autopilot hit the back of a police car stopped in the road, the NTSB found that Autopilot “permitted the driver to disengage from the driving task.” The case mirrored an earlier 2018 crash in Utah in which a Tesla driving on Autopilot hit the back of a fire truck stopped in the road. On December 29, another Tesla hit the back of a fire truck in the passing lane of Interstate 70 in Cloverdale, Indiana, though authorities have not announced whether that Tesla was driving on Autopilot.

The National Highway Traffic Safety Administration, which has the power to regulate vehicle safety systems, has opened an investigation into vehicle crashes in which cars were using self-driving systems. Of the 23 crashes in the investigation, 14 involve Tesla’s Autopilot.

Tesla Warns Drivers

Along with experts and other automakers, Tesla warns drivers that they have to keep their hands on the wheel and their attention on the road, but as these accidents show, too many Tesla drivers are finding ways around the safeguards.

Testing by Consumer Reports also revealed that Autopilot does a poor job of staying in its lane and making steady driving choices that are predictable to other drivers. The magazine called it “far less competent than a human driver.”

Experts say Tesla is taking the wrong approach. Many, if not most, automotive technical innovations trickle down from the aviation industry, and autopilot systems are no exception. One pilot who has become an authority on safety systems says Tesla is taking a backwards approach to Autopilot: Captain Chesley Sullenberger, who successfully crash-landed a US Airways Airbus A320 in the Hudson River in 2009, saving all 150 passengers and five crew members on board. The airline industry acknowledges that humans are bad at consistent repetition of monotonous tasks and cannot be relied upon to intervene on sudden notice after mentally disengaging. But they’re good at making complex assessments and devising creative solutions in a pinch. Thus, aircraft autopilot systems are designed to rely primarily on the pilot and to step in to assist and provide alerts when they detect mistakes. They’re not designed to take over and expect the pilot to intervene, an approach that has been blamed for recent crashes of two 737 Max airplanes as well as the catastrophic crash of an Airbus A330 over the Atlantic in 2009. (See A Fighter Pilot’s View On Tesla Autopilot.)

That’s the approach other automakers are taking, using the same types of sensors and software that Tesla uses as driver safety aids, but never letting them take over from the driver.

Staking the future of a company that many are counting on to lay out the template for a clean transportation future on such a flawed system, based on a flawed approach, erodes trust in a company that many in the public are counting on to make clean and efficient electric cars attractive enough to buyers to displace the world’s insatiable demand for oil. Such distractions are disappointing for those paying attention to what looks like a coming green revolution.

Tesla, truly, we want to believe. But this kind of hubris makes it hard.

Eric Evarts has been bringing topical insight to readers on energy, the environment, technology, transportation, business, and consumer affairs for 25 years. He has spent most of that time in bustling newsrooms at The Christian Science Monitor and Consumer Reports, but his articles have appeared widely at outlets such as the journal Nature Outlook, Cars.com, US News & World Report, AAA, and TheWirecutter.com and Alternet. He can tell readers how to get the best deal and avoid buying a lemon, whether it’s a used car or a bad mortgage. Along the way, he has driven more than 1,500 new cars of all types, but the most interesting ones are those that promise to reduce national dependence on oil, and those that improve the environment. At least compared to some old jalopy they might replace. You can find most of Eric's stories at Torque News Tesla coverage.

Comments

Tesla has an outstanding safety record, and stories to the contrary are primarily serving Tesla stock short sellers. I personally helped develop automobile tracking systems, and the number one issue that the average person does not understand is that there are absolutely ZERO electronic safety systems that operate 100% perfectly, all the time. Yet every single Tesla accident in the world is instantly published across the internet, completely ignoring the fact that about 90 people die every day in auto accidents in the U.S. and pretty much every single one of those accidents and deaths is caused by humans driving their own vehicles. In comparison to those numbers here are Autopilot's numbers: Tesla’s Vehicle Safety Report for Q4 2019 revealed that a Tesla on Autopilot was involved in one accident for every 3.07 million miles driven. For those driving without Autopilot but with the active safety features of the vehicle, there was one accident per 2.10 million miles driven. Tesla owners who do not use Autopilot and other active safety features were involved in one accident for every 1.64 million miles driven. Overall, these numbers are far better than what’s been recorded by the National Highway Traffic Safety Administration (NHTSA), which indicates there is one automobile crash in the United States every 479,000 miles. So I would be very happy to take those odds and trust Tesla's safety systems to watch and protect me against all of those impatient, intoxicated, and inattentive human drivers out there. Do I expect that it will operate without any errors, ever? Certainly not. But with the mess of dangerous human drivers out there that I experience in my daily commute, I would be very happy to trust an advanced computer safety system to watch my back, and front, and sides, every second of the drive.

To my knowledge there have only been a handful of crashes confirmed to have occurred with Autopilot (Ap) engaged. This despite the fact that there are nearly 900 THOUSAND Tesla vehicles, many regularly using Ap, on the world's roads. Even then, there is nothing to say that drivers have not intervened to actually cause the collision even when Ap was active. Or, as the recent video uploaded by some complete buffoon clearly demonstrates, Ap was engaged by the driver in an utterly inappropriate situation, causing the car to aquaplane and crash.

Your headline and the article in general are just yet more click-bait clap-trap. Please stop publishing them!

"Ap was engaged by the driver in an utterly inappropriate situation"... And that's really the crux of the problem: it can be engaged when the driver is paying no attention at all. And the only guidance Tesla provides beyond the legally mandated disclaimer screen is naming it "Autopilot," "Navigate on Autopilot," and, best of all, "Full Self-Driving Mode"!

Like I said, the biggest flaw is that a few people either assume that safety systems have the power of god to repeal the laws of physics and act perfectly in all crisis conditions, or a few others think that automated safety systems are the work of the devil and cannot be trusted at all. I find it amusing that you still blame Tesla for not refusing to turn on Autopilot when the car was driving too fast for those poor road conditions. This was 100% driver error. And I bet the driver would have done the same thing (crashed his car) using cruise control and using his knees to steer to get his YouTube-high speed night driving in the rain shot. All safety systems strive to make normal driving safer, but for extreme stupidity it just cushions the blow.