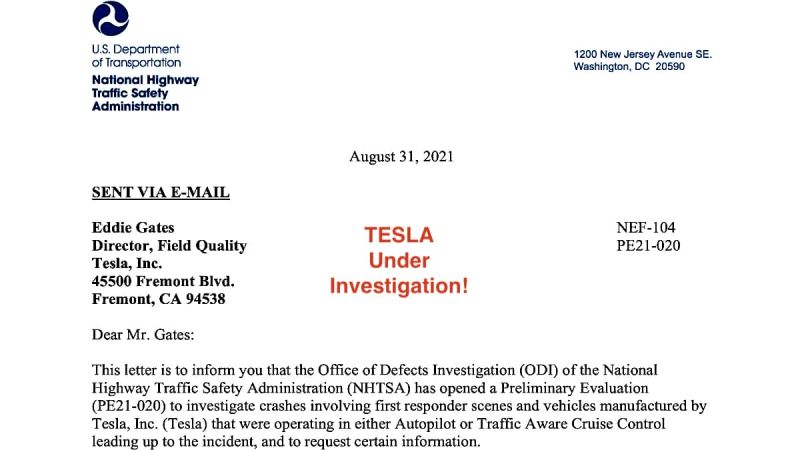

Official Notification to Tesla

Just this evening, news broke with the release of a copy of a letter sent by e-mail to Ed Gates, Tesla’s Director of Field Quality, informing him that “…the Office of Defects Investigation (ODI) of the National Highway Traffic Safety Administration (NHTSA) has opened a Preliminary Evaluation (PE21-020) to investigate crashes involving first responder scenes and vehicles manufactured by Tesla, Inc. (Tesla) that were operating in either Autopilot or Traffic Aware Cruise Control leading up to the incident, and to request certain information.”

This letter is the latest step taken by the NHTSA after it was revealed last month that federal vehicle safety regulators were launching a formal investigation into Tesla and its Autopilot system based on a series of fatal crashes that have left at least 17 people injured and 1 dead. More to the point, of particular concern are 12 crashes (to date) involving Tesla vehicles under Autopilot control during encounters with emergency vehicles.

What the NHTSA Wants and Why

According to the breaking news stories, the NHTSA wants the requested information from Tesla so as to determine whether Tesla’s Autopilot caused or contributed to crashes with first responder vehicles.

The concern is that, because the crashes took place after dark and involved crash or construction scenes with flashing emergency lights, the Autopilot system's sensors and software may have difficulty correctly interpreting particular driving situations and could thereby be putting the public at risk.

In just one small section of the letter, the NHTSA wants information that describes…Tesla’s strategies for detecting and responding to the presence of first responder / law enforcement vehicles and incident scene management tactics whether in or out of the roadway during subject system operation in the subject vehicles, to include:

1. Incident scene detection (particularly flashing lights, road flares, cones / barrels, reflectorized vests on personnel, vehicles parked at an angle “fend-off position”).

2. Explain the effects of low light conditions on these strategies.

3. List subject system behaviors (e.g., driver warnings, control interventions).

However, the above is just a small part of what the NHTSA wants.

According to CNBC News, Phil Koopman, professor of electrical and computer engineering at Carnegie Mellon University, has characterized NHTSA’s data request as “really sweeping” because the request covers Tesla’s entire Autopilot-equipped fleet, encompassing cars, software and hardware Tesla sold from 2014 to 2021.

“This is an incredibly detailed request for huge amounts of data. But it is exactly the type of information that would be needed to dig in to whether Tesla vehicles are acceptably safe,” stated Professor Koopman.

In fact, in the letter of notification, a single paragraph of 357 words lists practically every imaginable form of communication known to man, short of Sanskrit scratched into a clay tablet, that the NHTSA is requesting from Tesla in connection with Autopilot and its development. It is that extensive.

What This Could Mean for Tesla

News sources report that the NHTSA has the authority to mandate a recall if it determines not just that the Autopilot system is unsafe, but that any of the models, systems, or components within Tesla’s vehicles has a safety defect.

If this is true, then Tesla could be in trouble. In the past there have been noted problems with Autopilot, including a headlight high beam issue and possible Autopilot confusion with vehicles off to the side of the road that partially obstruct a lane.

However, based on the scope of the requested material, Tesla might not be physically able to provide everything requested; it’s just that massive. Plus, of course, there is the lawyer factor, which is sure to delay the investigation for months, if not years, to come. The October 22nd deadline is likely just a formality.

A copy of the letter from the NHTSA to Tesla can be found online here.

We Would Like to Hear Your Opinion

Take a look at the letter and let us know what you think in the comments section below about the level of requests made by the NHTSA.

Timothy Boyer is a Torque News automotive reporter based in Cincinnati. Experienced with early car restorations, he regularly restores older vehicles with engine modifications for improved performance. Follow Tim on Twitter at @TimBoyerWrites for daily automotive-related news.

Comments

It is NOT following up on "a series of fatal crashes" when there was only one fatality. Even though the news and NHTSA are not interested, the larger question is how many accidents there were between first responders and ALL other vehicles over the same 7-year period. But that answer doesn't get clicks and big headlines, does it? With the terrible fires and Covid health issues both in 2020 and this year, there are MANY more emergency vehicles parked out in the middle of public roadways, often at night. Tesla's Autopilot and FSD software has changed and improved dramatically over the last 5-10 years. So it would be pointless to try to assign blame to 7-year-old software that has long been replaced, if it really was even a problem after all, compared to the hundreds of similar unassisted accidents every year. No doubt Tesla will provide the NHTSA with all of the data that they require, even though it's largely a waste of resources on both sides. Tesla did need to improve their Autopilot software steadily over the past decade, which is why its current safety numbers are so good.

Hmmmm, well from the NHTSA there are reportedly 3 deaths linked to Autopilot, and from Reuters on June 18, 2021 there is an article "U.S. safety agency probes 10 Tesla crash deaths since 2016" (https://www.reuters.com/business/autos-transportation/us-safety-agency-says-it-has-opened-probes-into-10-tesla-crash-deaths-since-2016-2021-06-17/) that indicates the actual numbers are higher and under investigation by other agencies. I think that this justifies using the word "series"; however, the point of the article was really about whether the NHTSA was being overly heavy-handed with a demand for so much data. You have to consider that the NHTSA essentially wants everything even remotely related to Autopilot per their letter. The amount of data must be mind-boggling when you really stop to think about it. The logistics and manpower required to put it all together, with the supervision and approval of Tesla lawyers involved, will likely take significantly longer than the deadline date, although they can ask for an extension. I am not a diehard fan of Autopilot, but I have been excited about the related technology since its days in DARPA years before Tesla, and posit that the NHTSA's approach is not the answer to the problem of FSD. IMHO people on both sides of the issue need to apply some critical thinking skills to this rather than choose sides based on emotion and all of the frailties that come with being human.

BTW, I've worked years as a First Responder and no one wants another added risk to being hit by a vehicle while working in traffic just because someone else feels they have the privileged right to drive however they choose.

I think that it's important to note that Autopilot is always supposed to be assisting an active and aware driver. So every accident using Autopilot so far "should" have been avoided by the human driver, and was not. With that said, Tesla should share the responsibility for the accidents because they do actively imply that the cars will drive themselves. It's called Autopilot after all, and being human, most people take things at face value, especially with Tesla having promised Full Self Driving for many years. I think that Tesla should have used and enforced face and eye tracking from the beginning to make sure that drivers using Autopilot paid full attention to the road and traffic. Then this would not have been an issue, and those rare accidents probably would never have occurred. I do however see Autopilot and FSD as being vital to the progress of future safe driving, along with driver-assist software from other vendors. There will still (no doubt) be a tiny handful of accidents and even deaths in vehicles despite the best efforts of programmers to try for 100% accident-free driving. But I think that the greater long-term goal of having evolved FSD products will be well worth it in the long run, being able to lower the accidents and deaths due to driving, compared to today's human-piloted levels with rampant impatience, texting, and substance abuse.

In reply to I think that it's important by DeanMcManis (not verified)

Yep, I agree. And that's the real problem behind the development of FSD: there will always be people who will ruin a good idea by doing stupid things like sitting in the back seat rather than behind the wheel, taking a nap or watching streaming videos while on Autopilot, or finding ways to defeat safety features as a stunt to post on YouTube. Tesla should be held accountable for its marketing, but so should drivers who demonstrate willful negligence on the highways. Why should we have to wait until said drivers kill someone before regulations are passed concerning assisted driving of any type?! It's enough to make my head hurt, but that's the world we live in today.