For years, the promise of full autonomy has been stalled by a fundamental limitation in artificial intelligence: the "black box" problem. We have built machines that are exceptional at detecting objects, but remarkably poor at understanding context. At CES 2026, NVIDIA CEO Jensen Huang signaled the end of that era. Standing before a packed house, Huang unveiled Alpamayo, a Vision-Language-Action (VLA) model designed to transition autonomous vehicles (AVs) from simple pattern matching to genuine reasoning.

Paired with the new Vera Rubin automotive chip—the successor to the NVIDIA DRIVE Thor—this system doesn't just drive; it thinks. For the automotive industry, this represents the most significant shift since the introduction of the first neural networks for lane keeping.

From Bounding Boxes to Semantic Reasoning

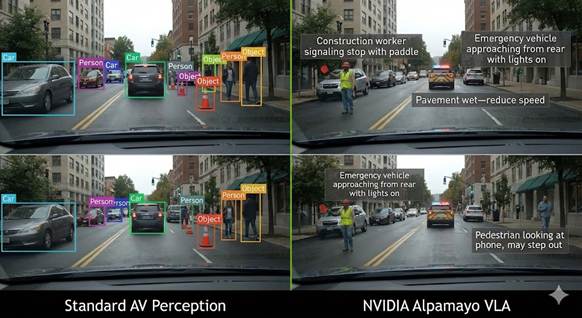

Historically, autonomous driving has relied on "perception stacks." A car’s cameras and LiDAR see a shape, draw a box around it, and label it "Pedestrian" or "Vehicle." The car then follows a set of probabilistic rules to avoid those boxes. However, this method lacks "common sense." If a box labeled "Traffic Cone" appears in an unusual place, the car often freezes because it doesn't understand why the cone is there or what the human intent behind it might be.
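To make that failure mode concrete, here is a minimal sketch of a label-driven planner. It is not any vendor's actual stack: the `Detection` type, the `RULES` table, and the `plan` function are hypothetical names invented for illustration. The sketch assumes the planner falls back to the most cautious action whenever a label has no rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str         # class name assigned by the detector
    confidence: float  # detector score in [0, 1]
    in_lane: bool      # whether the box overlaps the planned path


# Hypothetical rule table: label -> action when the object is in the lane.
RULES = {
    "Pedestrian": "stop",
    "Vehicle": "follow",
}


def plan(detections, min_confidence=0.5):
    """Return one action for the frame using label-based rules only."""
    action = "proceed"
    for det in detections:
        if not det.in_lane or det.confidence < min_confidence:
            continue
        # No rule for this label: the planner has no notion of why the
        # object is there, so it falls back to the most cautious action.
        rule = RULES.get(det.label, "stop")
        if rule == "stop":
            return "stop"
        action = rule
    return action
```

In this toy version, a confident "Traffic Cone" detection in the lane returns "stop" simply because the table has no entry for it. The planner never considers why the cone is there.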

Alpamayo changes this by utilizing a VLA architecture. Similar to how Large Language Models (LLMs) understand the relationship between words, Alpamayo understands the relationship between visual elements and physical actions. It doesn't just see a "box"; it sees a "child chasing a ball into the street" or a "police officer gesturing for traffic to merge left." This ability to reason allows the vehicle to handle "edge cases"—those rare, complex scenarios that have historically led to autonomous vehicle recalls and safety stand-downs.

Eliminating the "Fire Truck" Problem

One of the most persistent issues for autonomous taxi services like Waymo and the now-restructured Cruise has been their tendency to obstruct emergency vehicles. In several high-profile incidents, robotaxis have driven into active fire scenes or blocked ambulances because their sensors were overwhelmed by flashing lights and their logic didn't include "stop for a fire hose."

Because Alpamayo is a reasoning model, it can interpret the semantic meaning of an emergency scene. It understands that a fire truck parked at an angle with its lights on isn't just a "stationary obstacle," but the center of a high-priority safety perimeter. Instead of attempting to navigate around it based on lane lines, the Alpamayo-powered vehicle can reason that it should pull over immediately or follow the specific hand signals of a first responder. This move from "detection" to "cognition" could effectively eliminate the unpredictability that has plagued urban robotaxi deployments.

Vera Rubin: The Silicon Foundation for Trust

To run a model as complex as Alpamayo in real time, the hardware needs enormous compute capacity. The Vera Rubin chip, named after the pioneering astronomer, provides the massive throughput required for these multi-modal transformers. But the Rubin chip offers more than just raw horsepower; it enables "Explainable AI."

The greatest barrier to public trust in AVs is the feeling of powerlessness. When a human driver swerves, we can ask them why. When a robotaxi swerves, the passenger is left in the dark, often leading to anxiety or a loss of confidence in the platform. NVIDIA’s new architecture allows the car to translate its driving decisions into natural language in real time.

Imagine sitting in the back of a Vera Rubin-equipped taxi. The car suddenly moves to the far left of the lane. Simultaneously, a voice or a screen display explains: "Moving left to provide extra space for the cyclist ahead who is navigating a pothole." By providing the "why" behind the "what," NVIDIA is turning the black box into a glass box. Transparency is the foundation of trust, and this feature alone could do more for AV adoption than a thousand miles of accident-free driving.
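One way to picture that pairing of "what" and "why" is a single record that carries both the maneuver and its stated reason, so the passenger-facing explanation comes from the same object as the action. This is an illustrative sketch only: `Decision`, its fields, and `passenger_message` are hypothetical names, not part of any NVIDIA interface described at CES.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    maneuver: str            # the "what", e.g. "Moving left"
    purpose: str             # the "why", phrased to follow the maneuver
    lateral_offset_m: float  # signed shift within the lane; negative = left


def passenger_message(decision):
    """Render the decision as one sentence for a voice prompt or display."""
    return f"{decision.maneuver} {decision.purpose}."
```

For example, `Decision("Moving left", "to provide extra space for the cyclist ahead", -0.5)` would render as a single sentence combining the maneuver and its purpose.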

The Competitive Landscape: AMD and Qualcomm Close In

While NVIDIA remains the clear leader in the high-end autonomous stack, it is no longer the only game in town. The "NVIDIA tax," meaning the high cost and power consumption of its hardware, has opened the door for challengers.

AMD’s recent push into automotive, particularly with its Versal AI Edge Series and Ryzen-based cockpit solutions, offers a compelling alternative for manufacturers who want high performance with better energy efficiency. Simultaneously, Qualcomm’s Snapdragon Ride platform has become the darling of traditional OEMs like GM and BMW, which value Qualcomm’s expertise in integrated connectivity and power management.

NVIDIA is countering this by moving up the value chain. While AMD and Qualcomm are winning on "Digital Cockpit" experiences, NVIDIA is doubling down on the "AI Brain." By integrating Alpamayo and Vera Rubin, NVIDIA is betting that car makers will pay a premium for a system that isn't just "smart," but "wise."

Redefining the Software-Defined Vehicle

This shift toward VLA models like Alpamayo also redefines the Software-Defined Vehicle (SDV). In the past, an SDV meant a car that could get over-the-air updates for its maps or entertainment system. With Alpamayo, the SDV becomes an evolving entity that learns the nuances of different regions.

The way people drive in Paris is different from how they drive in Phoenix. A reasoning model can be "fine-tuned" for regional driving cultures without requiring a complete rewrite of the underlying code. This flexibility ensures that NVIDIA-powered fleets can scale globally far faster than systems relying on rigid, localized rule-sets.

Wrapping Up

The announcements at CES 2026 mark a turning point for the automotive industry. For years, we have been stuck in the "Perception Phase" of autonomy, where cars could see but couldn't understand. With the Alpamayo VLA model and the Vera Rubin chip, NVIDIA has officially moved the industry into the "Reasoning Phase." By solving the edge-case dilemmas of emergency scenes and providing the explainability needed to earn human trust, NVIDIA has set a high bar for the rest of the industry. The robotaxi may finally stop being a source of urban frustration and start being a trusted, predictable member of our transit ecosystem.

Disclosure: Images rendered by Nano Banana Pro

Rob Enderle is a technology analyst at Torque News who covers automotive technology and battery developments. You can learn more about Rob on Wikipedia and follow his articles on Forbes, X, and LinkedIn.
