Tesla Autopilot Being Revoked Needs a Better Solution

If you drive a Tesla and Autopilot is automatically disengaged on you three times ("three strikes"), you will be locked out of Autopilot for one week. Autopilot is not yet autonomous; it is an advanced driver-assistance system.

Now, it takes quite a bit of carelessness to get locked out of Autopilot. Keep in mind that this is the car disengaging Autopilot, which means the software would already have flashed multiple on-screen warnings to interact with the steering wheel and then sounded audible alerts.

It should be obvious to anyone driving that they need to interact with the steering wheel. In 16 months of owning a Tesla, I have had Autopilot disengage on me only once, and that was because the software was wrong (more on that later). Disengagements made by the driver don't count; the driver can disengage Autopilot as often as they want.

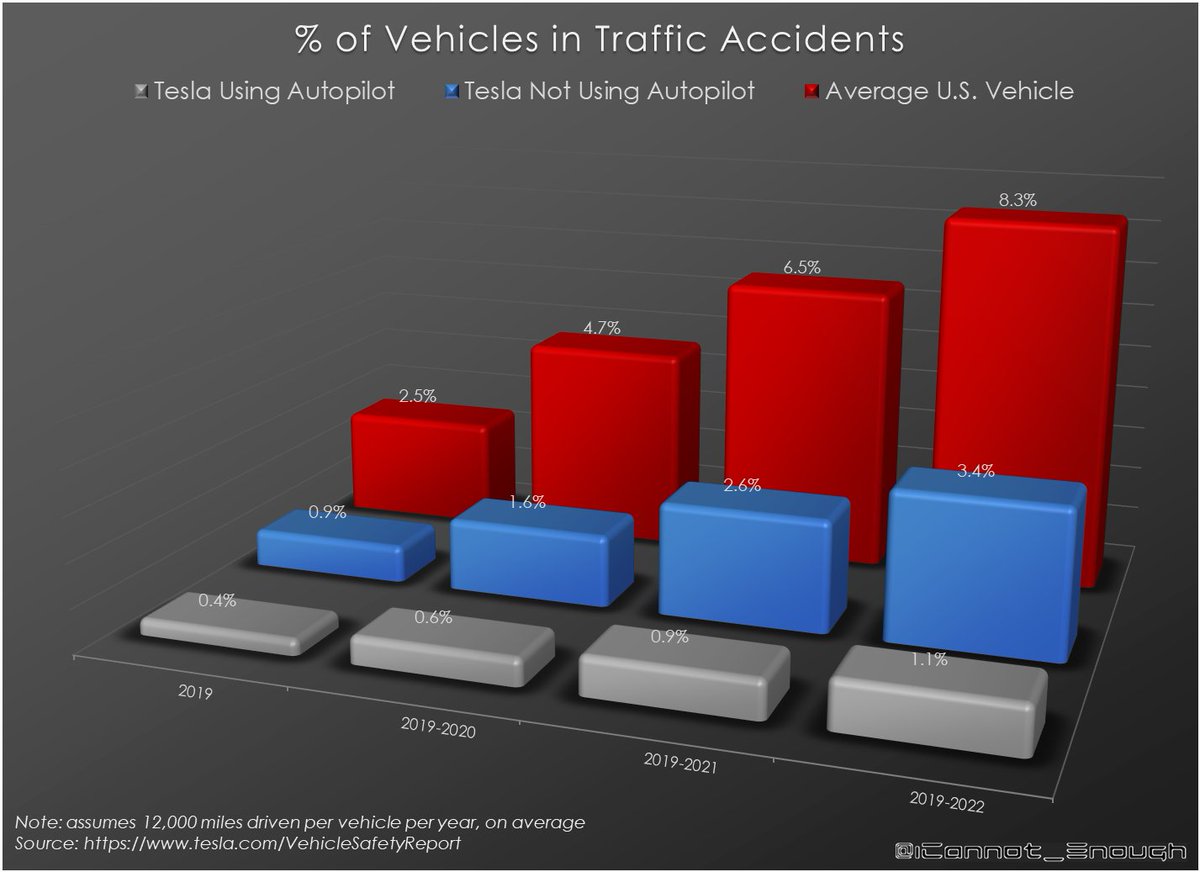

The problem arises during the week the owner is locked out of Autopilot after three strikes. Why is this a concern? It's all in the data. Take a look at this chart comparing the safety of driving with Autopilot versus without it:

There are three rows here: a Tesla using Autopilot, a Tesla not using Autopilot, and a non-Tesla vehicle. The first row shows the chance of an accident when using Autopilot in a Tesla. With Autopilot off, the data shows that an accident is more likely, and it is more likely still in a non-Tesla vehicle.

I can attest to this. When driving a Tesla, you get forward collision warnings, lane departure warnings, and other alerts that keep you safe, even if they can be annoying at times.

Autopilot is even safer now that it takes just a single pull to activate it.

Here's the Problem

When Tesla Autopilot isn't in use, the chance of an accident is two to three times higher. An accident isn't always fatal, but it is no joke: it can cause serious injury, harm, or death.

This is the major problem with disabling Autopilot for a week. Tesla is essentially telling the owner, "You must now drive at two to three times the risk of an accident, and there's nothing you can do to turn Autopilot back on."

Again, it is difficult to get three strikes using Autopilot. The software itself has to disengage, which means the driver either wasn't paying attention and ignored the warnings to interact with the steering wheel, or was trying to rig the system with a device on the steering wheel.

I think a better solution is to report this poor driving behavior to some kind of agency that could "ticket" the driver. Autopilot should stay enabled, but there should be some kind of fine, or a warning delivered to the local police.

Every case should be reviewed, though, because the software could disengage when it's not supposed to. I remember Autopilot disengaging on me once with a message saying it had detected a device on the steering wheel when, in fact, I had nothing on the steering wheel at all. The software was wrong.

Of course, sending reports to the police means more paperwork and may not be feasible. But if not this, what solution would work besides disabling Autopilot? It's too safe a feature to simply turn off on people, even when they are behaving poorly.

I believe FSD (Full Self-Driving) has the same strict requirements.

What do you think about Tesla Autopilot being automatically disengaged? Is this a safety concern?

Share this article with friends and family and on social media - or leave a comment below. You can view my most recent articles here for further reading. I am also on X/Twitter where I post more than just articles daily, as well as LinkedIn! Thank you so much for your support!

Hi! I'm Jeremy Noel Johnson. I am a Tesla investor and supporter, and I own a 2022 Model 3 RWD EV (and I don't have range anxiety :)). I enjoy bringing you breaking Tesla news as well as anything about Tesla or other EV companies I can find, like Aptera. Other interests of mine are AI, Tesla Energy and the Tesla Bot! You can follow me on X or LinkedIn to stay in touch and follow my Tesla and EV news coverage.

Comments

I totally agree with you.

Let me add that, in my opinion, there is another issue: Autopilot monitors whether you are paying attention by requiring a difference in weight on the wheel, which is basically a mistake.

If I drive correctly, even with Autopilot engaged, I usually keep both hands on the wheel, and this is not accepted by the Autopilot monitoring system, which, again, effectively requires me to drive incorrectly.

During the day, and if the radio or music is on, the messages can easily go unnoticed. As a result, Autopilot sometimes disengages even when my driving is correct and I am paying attention to the road rather than to the cockpit.

Hands on the wheel should be detected differently, for instance with a pressure sensor, or even with a weight sensor that works when the weight is perfectly balanced on both sides.